SEO for Law Firms Is a Different Beast.

We won’t sugar-coat it: search engine optimization and ranking on the first page of Google is tough for law firms. Top-tier attorneys in competitive spaces know what it takes to win a case, but success in the courtroom doesn’t always translate to success in growing a law firm, especially online and in the ever-changing world of digital marketing.

$1 Billion generated in leads and verdicts

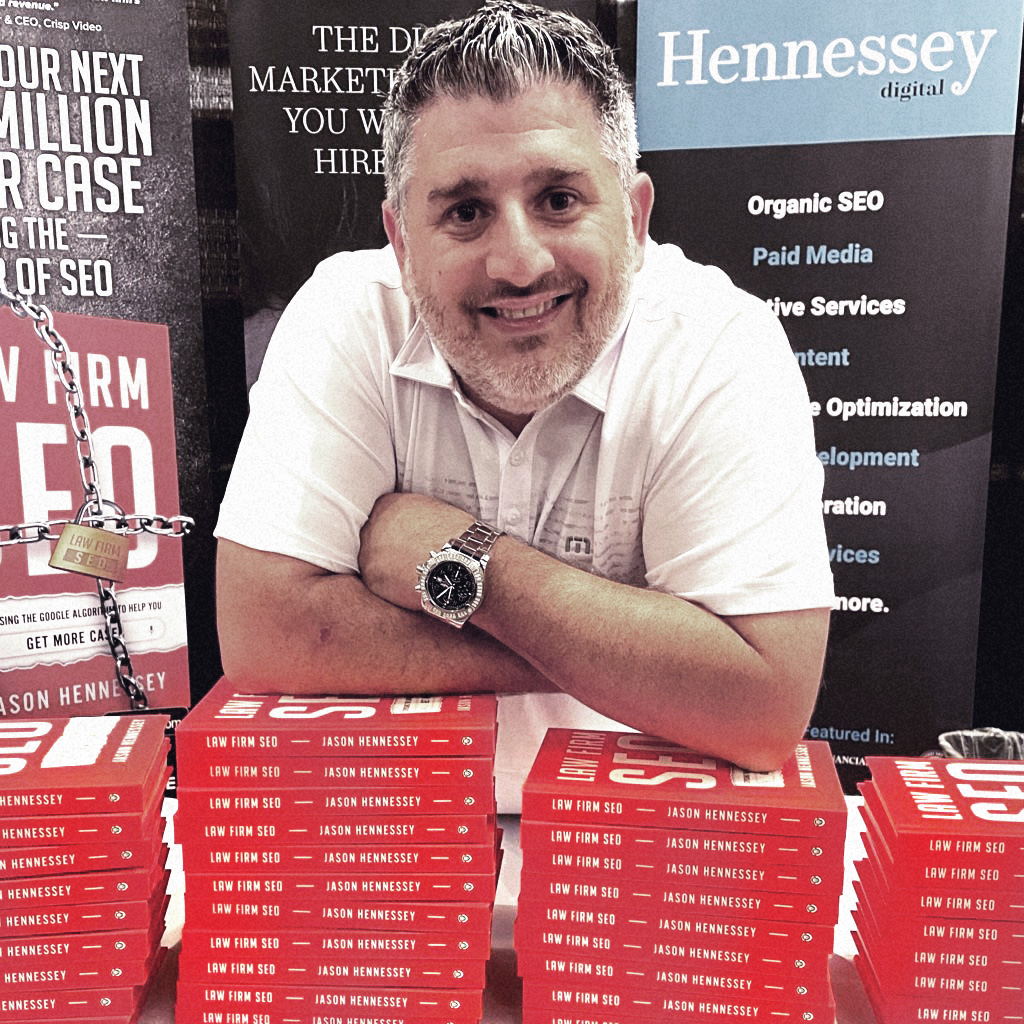

Law firms that want to dominate the legal market look to Hennessey Digital as their digital marketing and law firm SEO partner to get more quality leads and signed cases.

Any Questions?

How is SEO for lawyers different from other SEO?

SEO for law firms is a hyper-competitive space. A high volume of local and national lawyers compete for the same online real estate in the same market. Additionally, legal needs are so personal and specific that the words people use to search for counsel and describe their situation vary far more widely than they do for other services.

Fierce competition makes SEO for lawyers a difficult space to win. Because leads can be so valuable, law firms spend thousands of dollars on SEO strategies to optimize their websites so that they appear in organic search results for top keywords.

What should I be considering with law firm SEO?

When you hear “law firm SEO,” there are multiple components at play, including:

- Website and SEO audits: keyword research, URL structure, content

- Local SEO: Google My Business, Google Maps, local rankings

- Technical SEO: site speed, site architecture, accessibility

- Content: blog, FAQs, multimedia digital content

- Backlinks: digital PR, press releases

How does SEO for law firms compare to PPC (pay-per-click ads)?

Much is said about the benefits and drawbacks of SEO and PPC for law firms. Hennessey Digital helps firms build successful strategies for both! A law firm's digital marketing budget will depend on several factors, including the firm’s target geographical location, practice area(s), and competition.

SEO and PPC work in tandem to bring in more leads and grow a business. Because consumers increasingly find and select an attorney through organic search results, law firm SEO is the better long-term investment. Paid media, or pay-per-click ads, generate short-term gains and leads.

Some clients do both SEO and PPC; some use only one or the other. Choosing the right mix really depends on your law firm's goals and expectations.

How much should law firm SEO cost?

As with most law firm digital marketing questions, it depends. It’s important to hire the right agency to work with your law firm and help you achieve your business goals. Agencies like Hennessey Digital have deep experience in consumer behavior, digital marketing technologies, and analytics. We stay on top of changes by Google and other platforms that can affect how your website performs for your business. Factors that affect how much SEO for law firms can cost include:

- Services provided

- Market/geographic area

- Practice areas of the firm

- Agency expertise

- Targeted ROI

Why is choosing the right law firm SEO agency important?

As we say frequently around here, SEO is a long game. When we sign a new client, we start a new relationship that we design for the long term.

It’s important to choose an agency that understands your needs and explains what’s happening behind the scenes. A digital marketing agency that works with lawyers is familiar with the nuances of law firm SEO.

What are other ways to market my law firm besides SEO?

In addition to law firm SEO, Hennessey Digital helps top firms grow through:

Real Case Studies on SEO for Lawyers

The bottom line: Hennessey Digital helps top law firms grow.

How do we do it?

Through cutting-edge SEO strategies designed by the best minds in digital marketing. (And a lot of hard work.)